Chinese room argument

Published on 22/02/2012

Excerpt from the document

«

alone.

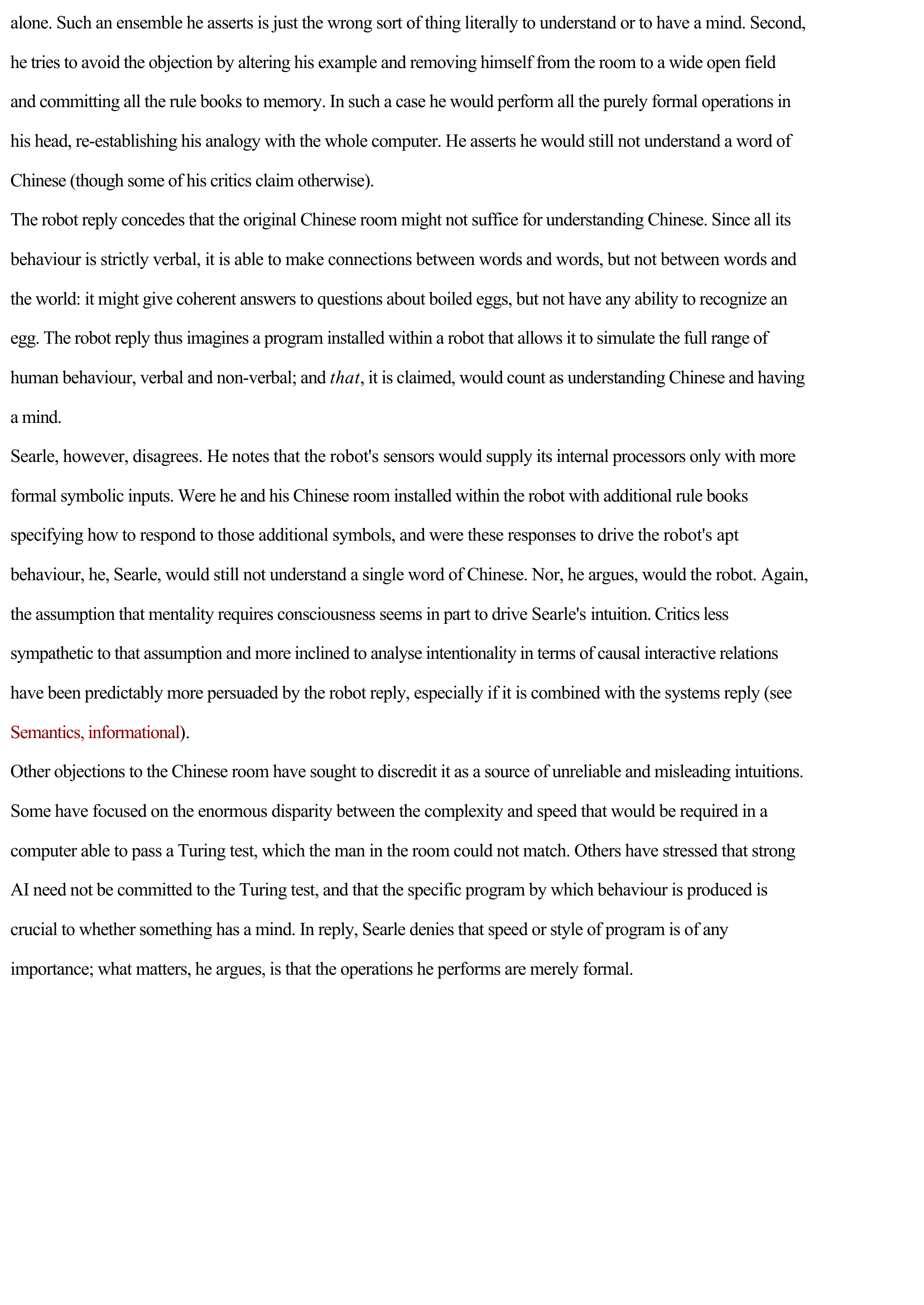

Such an ensemble, he asserts, is just the wrong sort of thing literally to understand or to have a mind. Second, he tries to avoid the objection by altering his example, removing himself from the room to a wide open field and committing all the rule books to memory. In such a case he would perform all the purely formal operations in his head, re-establishing his analogy with the whole computer. He asserts he would still not understand a word of Chinese (though some of his critics claim otherwise).

The robot reply concedes that the original Chinese room might not suffice for understanding Chinese. Since all its behaviour is strictly verbal, it is able to make connections between words and words, but not between words and the world: it might give coherent answers to questions about boiled eggs, but not have any ability to recognize an egg. The robot reply thus imagines a program installed within a robot that allows it to simulate the full range of human behaviour, verbal and non-verbal; and that, it is claimed, would count as understanding Chinese and having a mind.

Searle, however, disagrees. He notes that the robot's sensors would supply its internal processors only with more formal symbolic inputs. Were he and his Chinese room installed within the robot with additional rule books specifying how to respond to those additional symbols, and were these responses to drive the robot's apt behaviour, he, Searle, would still not understand a single word of Chinese. Nor, he argues, would the robot. Again, the assumption that mentality requires consciousness seems in part to drive Searle's intuition. Critics less sympathetic to that assumption and more inclined to analyse intentionality in terms of causal interactive relations have been predictably more persuaded by the robot reply, especially if it is combined with the systems reply (see Semantics, informational).

Other objections to the Chinese room have sought to discredit it as a source of unreliable and misleading intuitions. Some have focused on the enormous disparity between the complexity and speed that would be required of a computer able to pass a Turing test and anything the man in the room could match. Others have stressed that strong AI need not be committed to the Turing test, and that the specific program by which behaviour is produced is crucial to whether something has a mind. In reply, Searle denies that speed or style of program is of any importance; what matters, he argues, is that the operations he performs are merely formal.

»
